ERASE: Error-Resilient Representation Learning on Graphs for Label Noise Tolerance

Ling-Hao Chen1,2, Yuanshuo Zhang2,3, Taohua Huang3, Liangcai Su1, Zeyi Lin2,3, Xi Xiao1, Xiaobo Xia4, and Tongliang Liu4

1Tsinghua University, 2SwanHub.co, 3Xidian University, 4The University of Sydney

Abstract

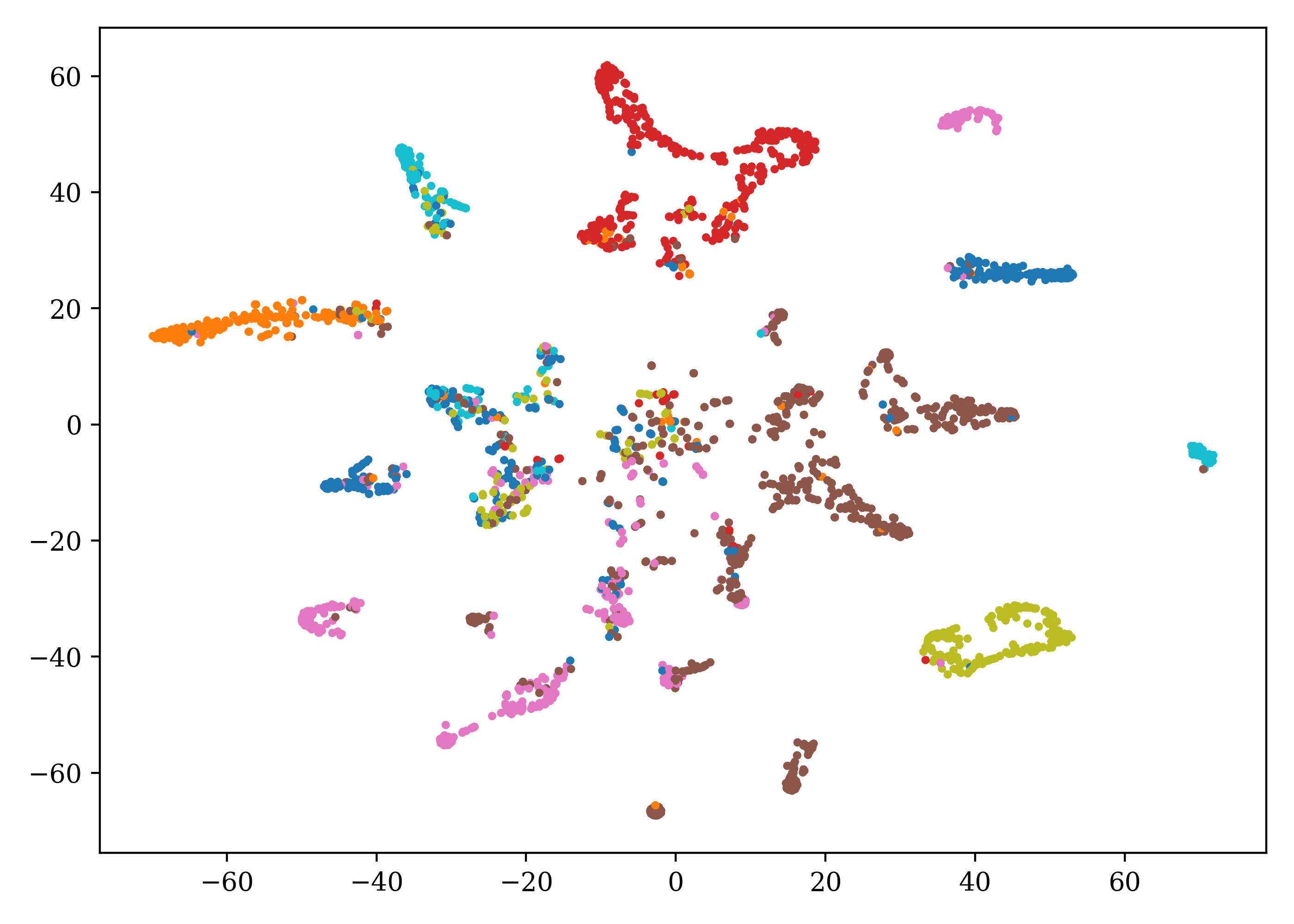

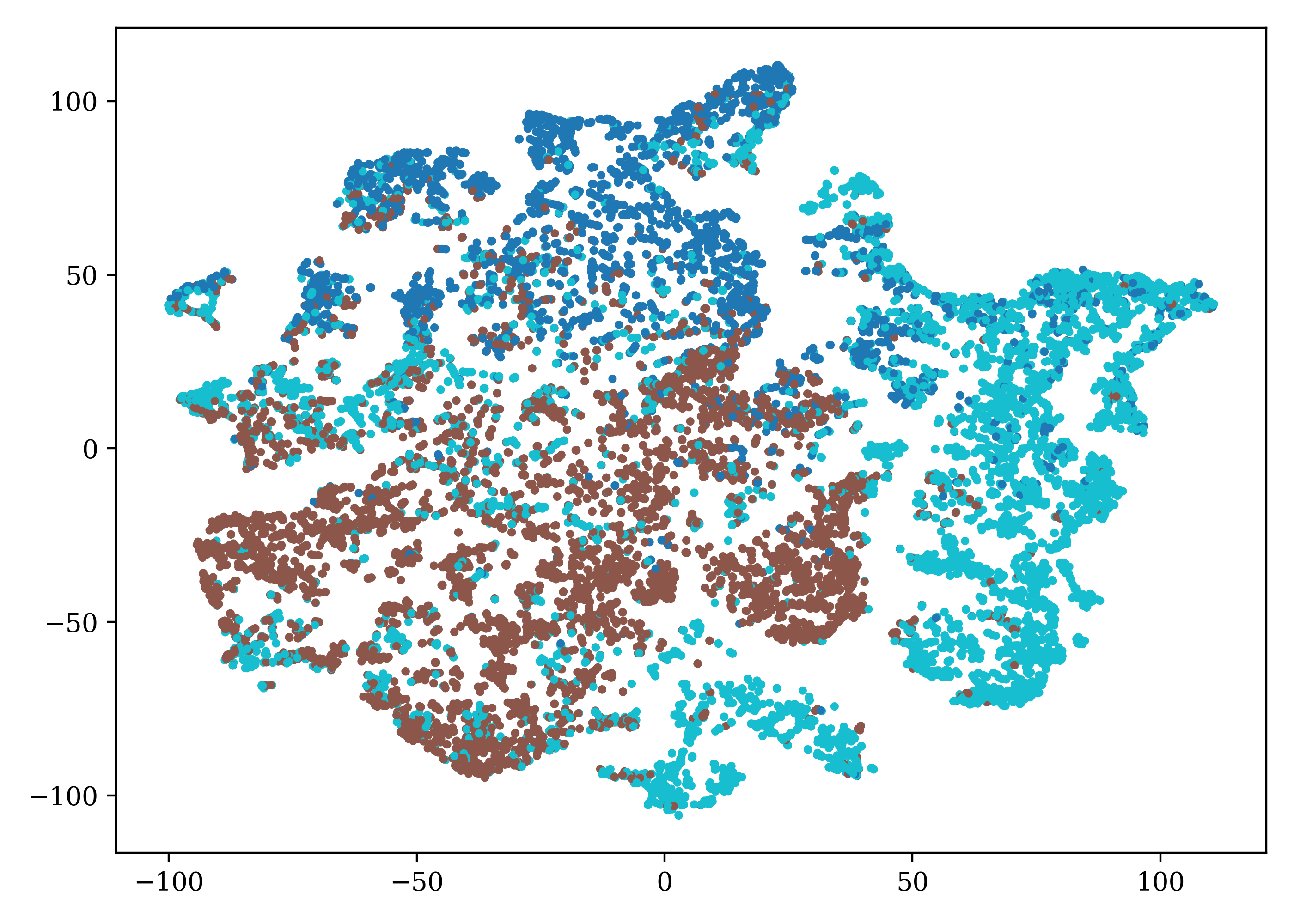

Deep learning has achieved remarkable success in graph-related tasks, yet this success relies heavily on large-scale, high-quality annotated datasets. Acquiring such datasets can be cost-prohibitive, so in practice labels are often obtained from cheaper sources such as web searches and user tags. Unfortunately, these labels are often noisy, which compromises the generalization performance of deep networks. To tackle this challenge and enhance the robustness of deep learning models against label noise in graph-based tasks, we propose a method called ERASE (Error-Resilient representation learning on graphs for lAbel noiSe tolerancE). The core idea of ERASE is to learn representations with error tolerance by maximizing coding rate reduction. In particular, we introduce a decoupled label propagation method for learning representations. Before training, noisy labels are pre-corrected through structural denoising. During training, ERASE combines prototype pseudo-labels with propagated denoised labels and updates representations with error resilience, which significantly improves the generalization performance in node classification. The proposed method withstands errors caused by mislabeled nodes more effectively, thereby strengthening the robustness of deep networks on noisy graph data. Extensive experimental results show that our method outperforms multiple baselines by clear margins across a broad range of noise levels and scales well. Code is released at https://github.com/eraseai/erase.
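As a rough illustration of the objective named in the abstract, the sketch below computes a coding rate reduction in NumPy, using the standard maximal coding rate reduction formulation: the coding rate of all representations minus the label-weighted sum of per-class coding rates. This is not the released ERASE code. The function names, the row-per-node layout of `Z`, and the default `eps` are assumptions made for the example.

```python
import numpy as np


def coding_rate(Z, eps=0.5):
    """Coding rate 1/2 * logdet(I + d / (n * eps^2) * Z^T Z).

    Z has shape (n, d): one d-dimensional representation per row.
    `eps` is an assumed distortion parameter, not a value from the paper.
    """
    n, d = Z.shape
    gram = Z.T @ Z  # (d, d)
    _, logdet = np.linalg.slogdet(np.eye(d) + (d / (n * eps ** 2)) * gram)
    return 0.5 * logdet


def coding_rate_reduction(Z, labels, num_classes, eps=0.5):
    """Rate of the whole set minus the weighted rates of each class subset."""
    n, _ = Z.shape
    total = coding_rate(Z, eps)
    within = 0.0
    for c in range(num_classes):
        Zc = Z[labels == c]
        if Zc.shape[0] == 0:
            continue  # an empty class contributes nothing
        within += (Zc.shape[0] / n) * coding_rate(Zc, eps)
    return total - within


# Toy example: two groups of representations in orthogonal directions.
Z = np.array([[1.0, 0.0]] * 4 + [[0.0, 1.0]] * 4)
clean = np.array([0, 0, 0, 0, 1, 1, 1, 1])  # labels match the geometry
mixed = np.array([0, 1, 0, 1, 0, 1, 0, 1])  # labels ignore the geometry
print(coding_rate_reduction(Z, clean, 2), coding_rate_reduction(Z, mixed, 2))
```

In this toy case the labels that agree with the geometry of the representations give a strictly larger rate reduction than the interleaved labels, which is the quantity a training loop would maximize.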

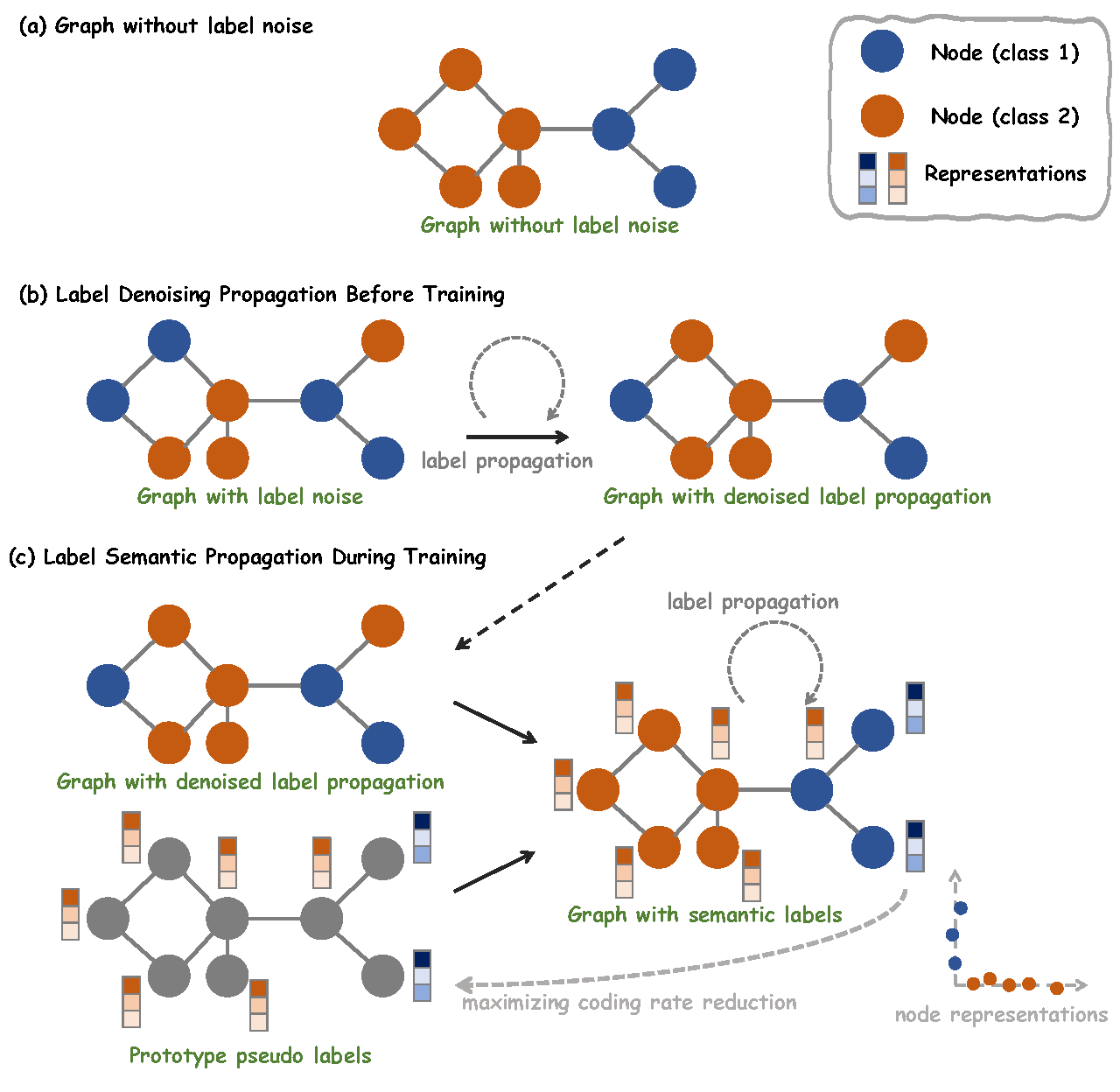

Overview of ERASE

The procedure of ERASE. (a) A graph without label noise. (b) For a graph with label noise, before training, we perform label denoising on all training nodes. (c) During training, we estimate the prototype labels with learned representations and combine them with denoised labels to obtain the semantic labels. We update representations with semantic labels via maximizing coding rate reduction.
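Steps (b) and (c) can be pictured with the minimal sketch below. It is an illustration under stated assumptions, not the authors' implementation: the denoising step is replaced by classic label propagation over a symmetrically normalized adjacency matrix, prototype labels come from cosine similarity to class-mean representations, and the mixing weights `alpha` and `beta` are hypothetical. All function names are made up for this example.

```python
import numpy as np


def normalized_adjacency(A):
    """Symmetric normalization D^{-1/2} (A + I) D^{-1/2} with self-loops."""
    A_hat = A + np.eye(A.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(A_hat.sum(axis=1))
    return A_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]


def propagate_labels(A, Y, alpha=0.8, num_steps=10):
    """Assumed denoising step: F <- alpha * S F + (1 - alpha) * Y."""
    S = normalized_adjacency(A)
    F = Y.copy()
    for _ in range(num_steps):
        F = alpha * (S @ F) + (1.0 - alpha) * Y
    return F


def prototype_labels(Z, soft_labels):
    """Soft labels from cosine similarity to class prototypes.

    Prototypes are label-weighted sums of representations (an assumption).
    """
    protos = soft_labels.T @ Z  # (num_classes, d)
    protos = protos / (np.linalg.norm(protos, axis=1, keepdims=True) + 1e-12)
    Zn = Z / (np.linalg.norm(Z, axis=1, keepdims=True) + 1e-12)
    sim = Zn @ protos.T
    e = np.exp(sim - sim.max(axis=1, keepdims=True))  # row-wise softmax
    return e / e.sum(axis=1, keepdims=True)


def semantic_labels(denoised, proto, beta=0.5):
    """Hypothetical combination: convex mix of both label sources."""
    return (beta * denoised + (1.0 - beta) * proto).argmax(axis=1)


# Toy graph: two 4-node cliques; node 3 carries a flipped label.
A = np.zeros((8, 8))
A[:4, :4] = 1.0
A[4:, 4:] = 1.0
np.fill_diagonal(A, 0.0)
noisy = np.array([0, 0, 0, 1, 1, 1, 1, 1])
Y = np.eye(2)[noisy]  # one-hot noisy labels
Z = np.array([[1.0, 0.0]] * 4 + [[0.0, 1.0]] * 4)  # stand-in representations

denoised = propagate_labels(A, Y)
print(semantic_labels(denoised, prototype_labels(Z, denoised)))
```

In this toy graph the flipped label on node 3 is outvoted by its clique neighbours during propagation, and the prototype labels agree with the denoised ones. The resulting hard labels could then be passed to a coding-rate-reduction objective.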

Citation

@article{chen2023erase,
  title={ERASE: Error-Resilient Representation Learning on Graphs for Label Noise Tolerance},
  author={Chen, Ling-Hao and Zhang, Yuanshuo and Huang, Taohua and Su, Liangcai and Lin, Zeyi and Xiao, Xi and Xia, Xiaobo and Liu, Tongliang},
  journal={arXiv preprint arXiv:2312.08852},
  year={2023}
}